Who’s Wearing the Glasses?

User authentication and identification using head movements on smart glasses

🎯 Motivation

Smart glasses are gaining traction in healthcare, education, and industrial training, yet they lack robust, user-friendly methods for personal authentication. Touch-based or alphanumeric methods (e.g., PINs) are often infeasible because the devices have a limited input interface or none at all. Pairing the glasses with a mobile device is inconvenient and also introduces pairing dependencies and security vulnerabilities.

This project addresses a critical gap: How can we design an intuitive, privacy-preserving, and on-device authentication mechanism for smart glasses that does not rely on external devices or intrusive biometrics?

We hypothesize that head movement patterns, captured via built-in inertial sensors (IMU), can serve as behavioral biometrics unique to individuals. This approach has several advantages:

- ✅ Device-native: No need for extra hardware or external sensors

- ✅ Passive and natural: Users only perform simple, intuitive gestures

- ✅ Lightweight: Designed with edge deployment in mind (e.g., minimal features, low compute)

The project advances both user authentication and user identification on wearable head-mounted displays (HMDs) through gesture-based behavioral modeling, contributing to the broader field of human-centered, privacy-preserving AI.

📎 Links

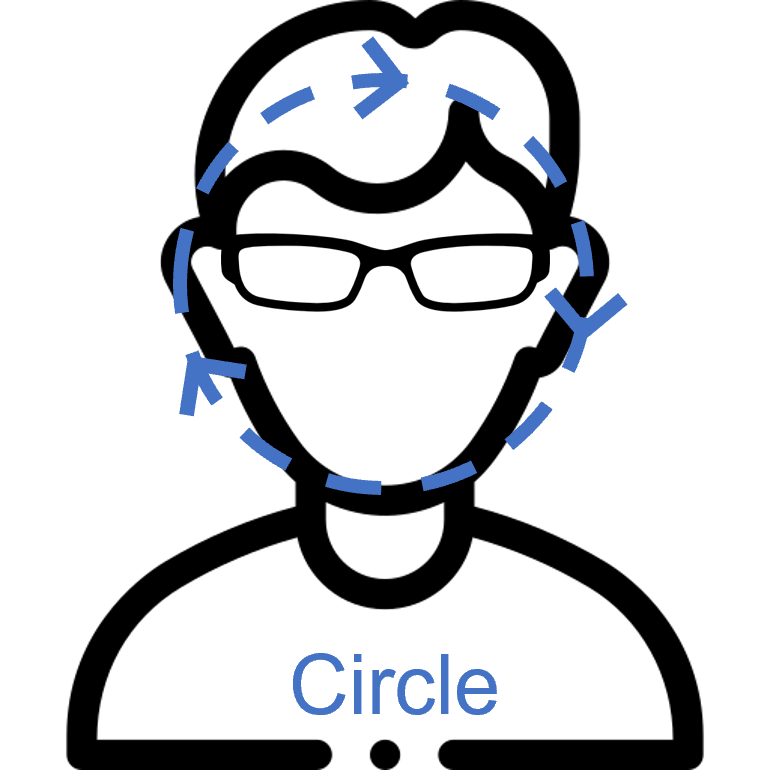

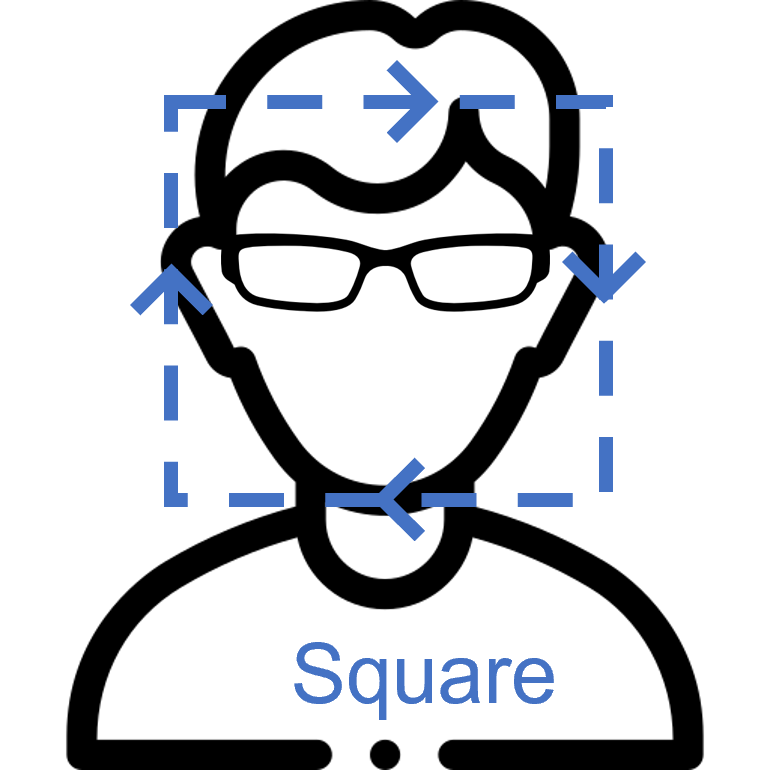

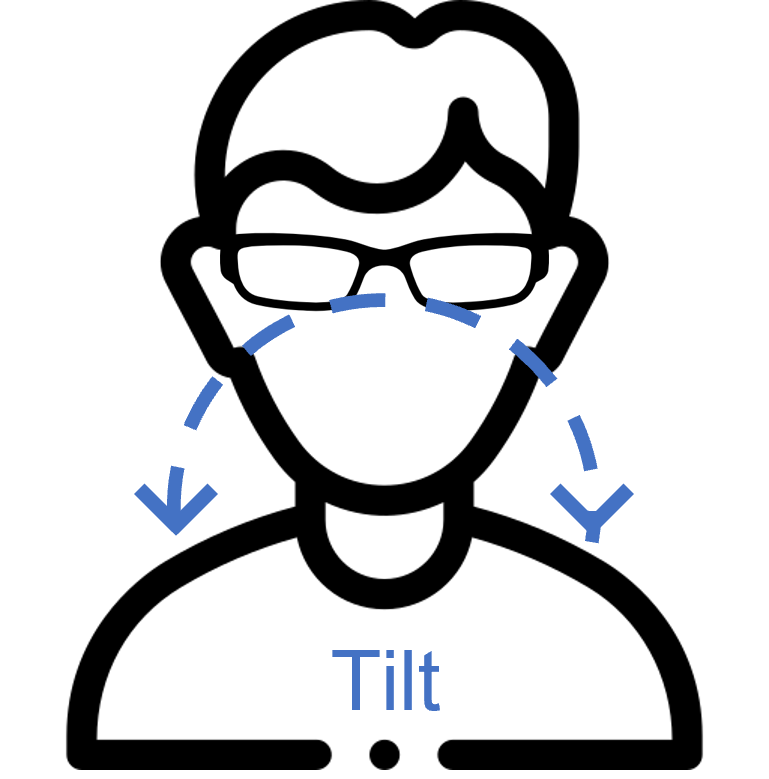

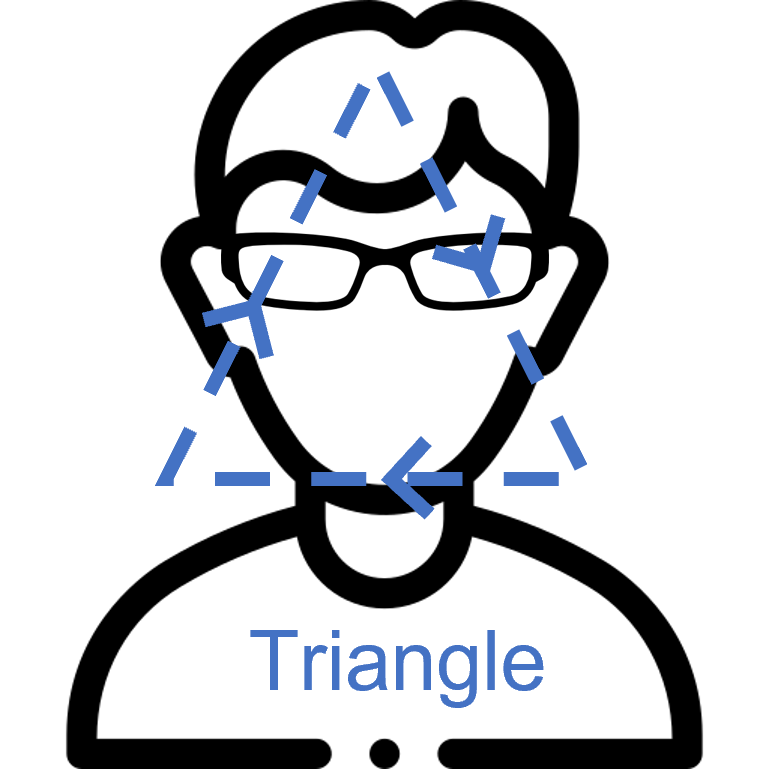

👤 Head Gestures

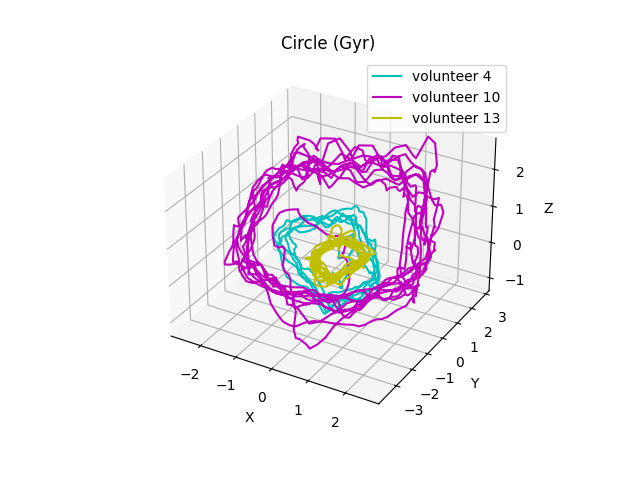

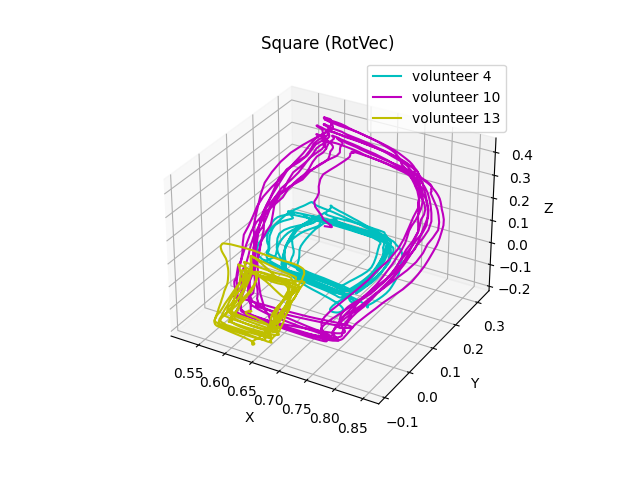

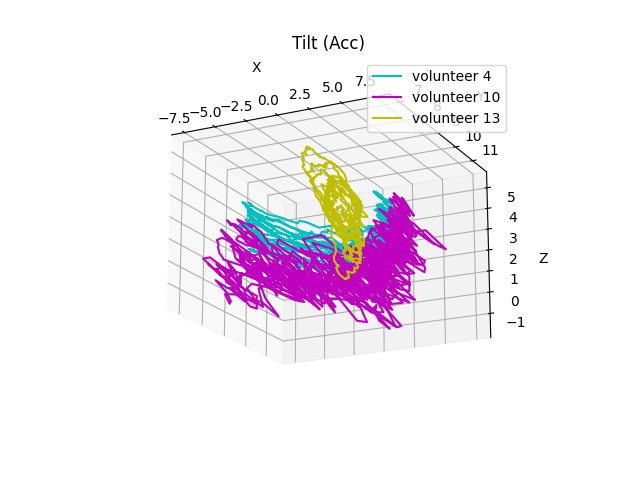

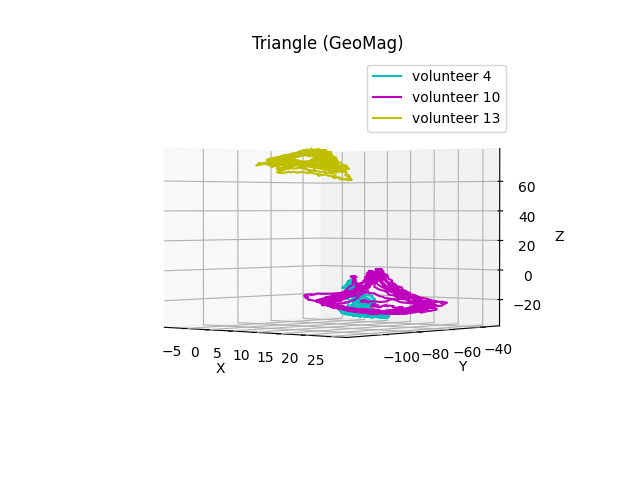

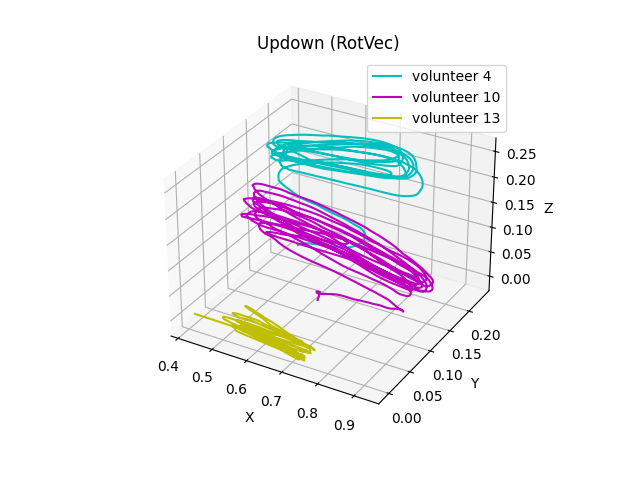

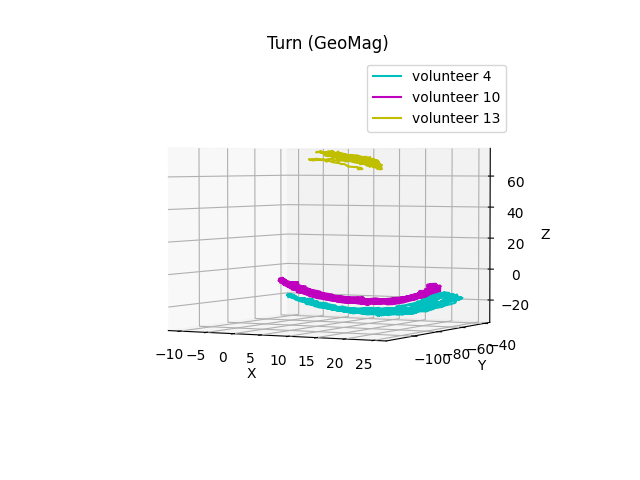

📈 3D Sensor Plots

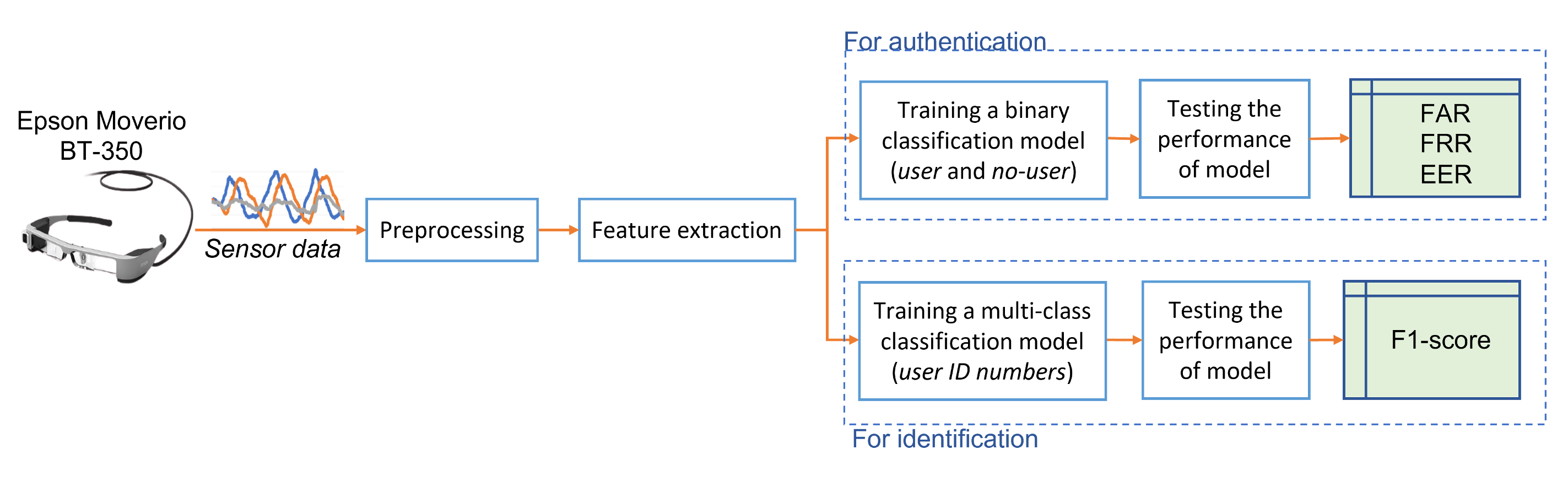

🧊 Architecture Overview

The system processes motion data from smart glasses to authenticate and identify users based on their head gestures. Data is collected from four onboard sensors (accelerometer, gyroscope, rotation vector, geomagnetic), segmented into 1-second windows, and represented using simple statistical features. We frame authentication as a binary classification task and identification as a multi-class task, evaluating models such as AdaBoost and Random Forest. With minimal preprocessing and lightweight models, the system reaches a weighted F1-score of up to 99.3% for identification and an EER as low as 1.3% for authentication using only two or three of the sensors, enabling accurate, on-device user recognition.
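The windowing and feature step described above can be sketched as follows. This is a minimal illustration, not the project's actual pipeline: the function name `window_features`, the non-overlapping windows, the dropped trailing partial window, and the toy 4 Hz sample rate are all assumptions made for the example.

```python
from statistics import mean


def window_features(samples, sample_rate_hz, window_s=1.0):
    """Split a multi-axis sensor stream into fixed windows and summarize each.

    samples: list of per-reading tuples, e.g. (x, y, z) from one sensor.
    Returns one feature row per full window: mean, min, max for every axis.
    """
    size = int(sample_rate_hz * window_s)  # readings per window
    rows = []
    # Assumption: non-overlapping windows; a trailing partial window is dropped.
    for start in range(0, len(samples) - size + 1, size):
        window = samples[start:start + size]
        row = []
        for axis in zip(*window):  # transpose: one sequence per axis
            row.extend([mean(axis), min(axis), max(axis)])
        rows.append(row)
    return rows


# Toy stream: 2 seconds of 3-axis readings at a hypothetical 4 Hz.
stream = [(0, 1, 2), (1, 1, 2), (2, 1, 2), (3, 1, 2),
          (4, 0, 5), (4, 2, 5), (4, 4, 5), (4, 6, 5)]
features = window_features(stream, sample_rate_hz=4)
```

Each row holds three values per axis, so a 3-axis sensor yields nine features per window. Rows from several sensors could be concatenated to form the input for a classifier.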

Performance Evaluation

- Authentication metric: Equal Error Rate (EER)

- Identification metric: Weighted F1-score

- Best results: 1.3% EER with AdaBoost, 99.3% F1-score with Random Forest
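The EER is the operating point at which the false acceptance rate (impostors accepted) equals the false rejection rate (genuine users rejected). The sketch below approximates it by sweeping a threshold over classifier scores. The function name and the toy scores are hypothetical, and the project's own evaluation code may compute the value differently.

```python
def equal_error_rate(genuine_scores, impostor_scores):
    """Approximate the EER by sweeping a decision threshold over all scores.

    Higher score = more likely the legitimate user. At each threshold t,
    a sample is accepted when score >= t.
    """
    best_gap, eer = None, None
    for t in sorted(set(genuine_scores) | set(impostor_scores)):
        # False rejection rate: genuine attempts scored below the threshold.
        frr = sum(s < t for s in genuine_scores) / len(genuine_scores)
        # False acceptance rate: impostor attempts at or above the threshold.
        far = sum(s >= t for s in impostor_scores) / len(impostor_scores)
        gap = abs(far - frr)
        # Keep the threshold where the two error rates are closest.
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Toy scores (made up for illustration): one impostor outscores one genuine try.
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.6, 0.3, 0.2, 0.1]
eer = equal_error_rate(genuine, impostor)
```

With a finite set of scores the two error rates rarely cross exactly, so the sketch reports the midpoint at the closest threshold.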

📊 Results

We modeled:

- Authentication as binary classification (user vs. impostor)

- Identification as multi-class classification (which user is wearing the glasses?)
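The two framings differ only in how the labels are built from the same feature windows. A minimal sketch, with hypothetical user IDs and helper names:

```python
def authentication_labels(user_ids, target_user):
    """Binary labels: 1 for the enrolled (target) user, 0 for everyone else."""
    return [1 if uid == target_user else 0 for uid in user_ids]


def identification_labels(user_ids):
    """Multi-class labels: map each distinct user ID to an integer class."""
    classes = sorted(set(user_ids))
    index = {uid: i for i, uid in enumerate(classes)}
    return [index[uid] for uid in user_ids], classes


# One entry per feature window, naming the user who produced it (toy data).
window_owners = ["u03", "u01", "u02", "u01", "u03"]
auth_y = authentication_labels(window_owners, target_user="u01")
ident_y, classes = identification_labels(window_owners)
```

In the binary framing, one model is trained per enrolled user and the impostor class usually dominates. SMOTE, listed in the stack, is a common way to address that kind of class imbalance, although where it is applied in this project is not stated here.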

Using lightweight statistical features (mean, min, max) computed over 1-second windows, we achieved:

| Task | Best Performance | Classifier | Sensors Used |
|---|---|---|---|
| Authentication | 1.3% Equal Error Rate | AdaBoost | Rotation Vector + Geomagnetic |
| Identification | 99.3% F1-score | Random Forest | Accelerometer + Rotation Vector + Geomagnetic |
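The identification score is a weighted F1: the F1 of each class is averaged with weights proportional to how many true samples that class has. The sketch below spells out the calculation on made-up labels. In practice, scikit-learn's `f1_score` with `average="weighted"` does the same job.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's support."""
    support = Counter(y_true)  # number of true samples per class
    total = 0.0
    for cls, n in support.items():
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = n - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        total += f1 * n  # weight by support
    return total / len(y_true)


# Toy labels (not project data): one window of user "a" is mistaken for "b".
y_true = ["a", "a", "a", "b", "b", "c"]
y_pred = ["a", "a", "b", "b", "b", "c"]
score = weighted_f1(y_true, y_pred)
```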

⚙️ Technical Stack

- Hardware: Epson Moverio BT-350 Smart Glasses

- Sensors: Accelerometer, Gyroscope, Rotation Vector, Geomagnetic

- Features: Mean, Min, Max in 1s windows

- Models: AdaBoost, Random Forest, SVM, MLP

- Tools: Python, scikit-learn, SMOTE, Matplotlib